Yunpeng Zhang

I currently lead the large model department at PhiGent Robotics, focusing on end-to-end autonomous driving, neural reconstruction, and large models. Prior to that, I obtained my master's degree in 2022 from the I-VisionGroup, part of the Department of Automation at Tsinghua University, where I worked on monocular 3D object detection for autonomous driving under the supervision of Jiwen Lu. In 2019, I received my bachelor's degree from the Department of Automation, Tsinghua University.

We are actively hiring full-time employees and interns for motion prediction, end-to-end driving, neural reconstruction with 3D Gaussians, and world models. Feel free to drop me an email at yunpengzhang97@gmail.com if you are interested.

Research

My research experience encompasses a variety of applications in autonomous driving scenarios, including vision-based 3D object detection, online mapping, occupancy estimation, motion prediction, end-to-end planning, 3D reconstruction, and large models.

Stag-1: Towards Realistic 4D Driving Simulation with Video Generation Model
Lening Wang, Wenzhao Zheng, Dalong Du, Yunpeng Zhang, Yilong Ren, Han Jiang, Zhiyong Cui, Haiyang Yu, Jie Zhou, Jiwen Lu, Shanghang Zhang
arXiv, 2025
arxiv / code / website

Spatial-Temporal simulAtion for drivinG (Stag-1) enables controllable 4D autonomous driving simulation with spatial-temporal decoupling.

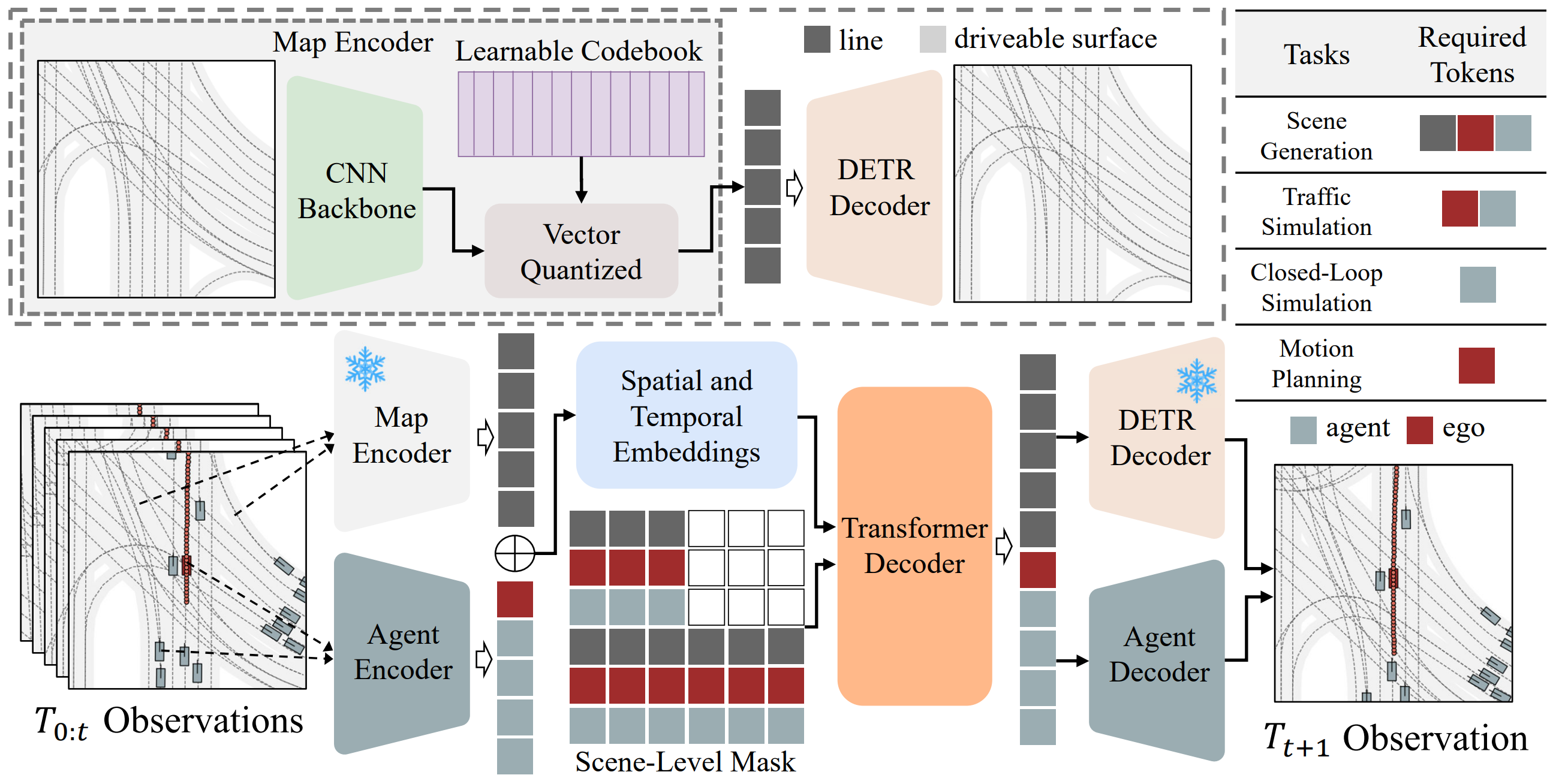

GPD-1: Generative Pre-training for Driving
Zetian Xia, Sicheng Zuo, Wenzhao Zheng, Yunpeng Zhang, Dalong Du, Jie Zhou, Jiwen Lu, Shanghang Zhang
arXiv, 2025
arxiv / code / website

GPD-1 proposes a unified approach that seamlessly accomplishes multiple aspects of scene evolution, including scene simulation, traffic simulation, closed-loop simulation, map prediction, and motion planning, all without additional fine-tuning.

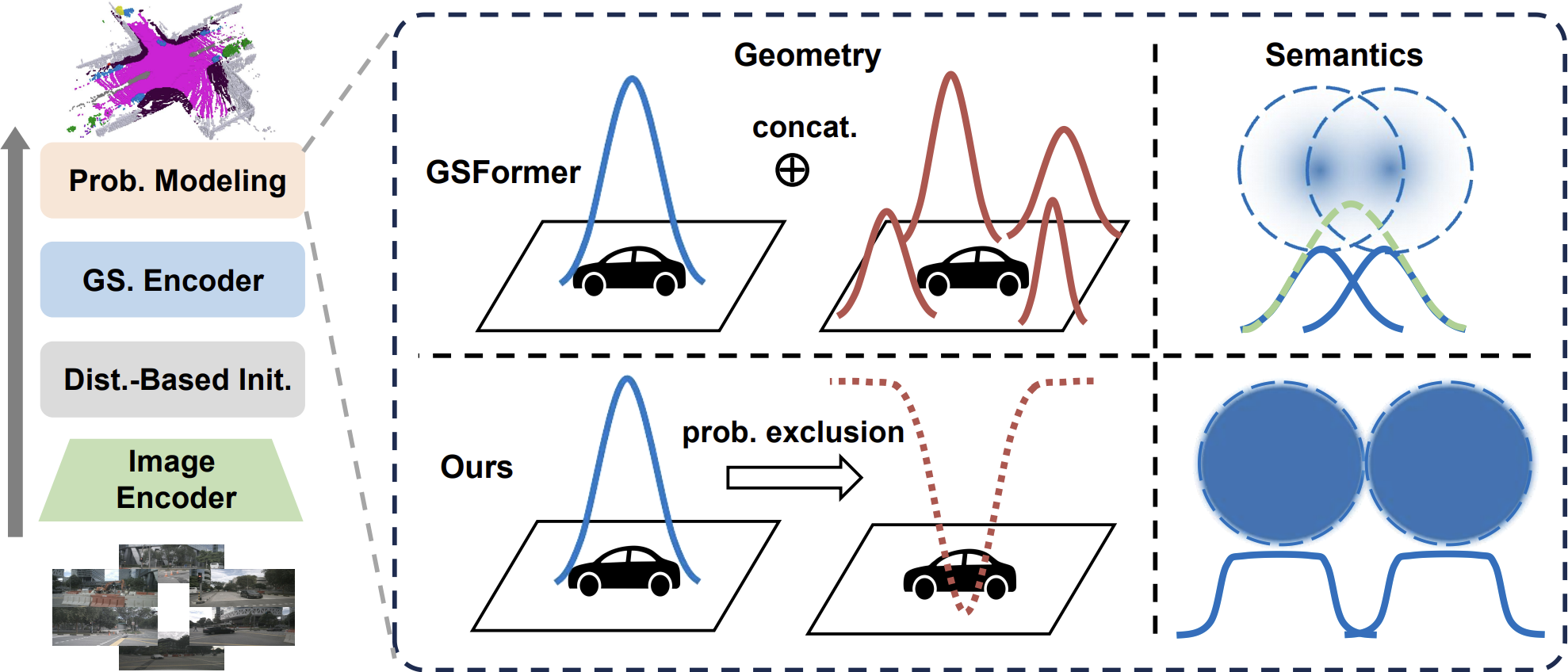

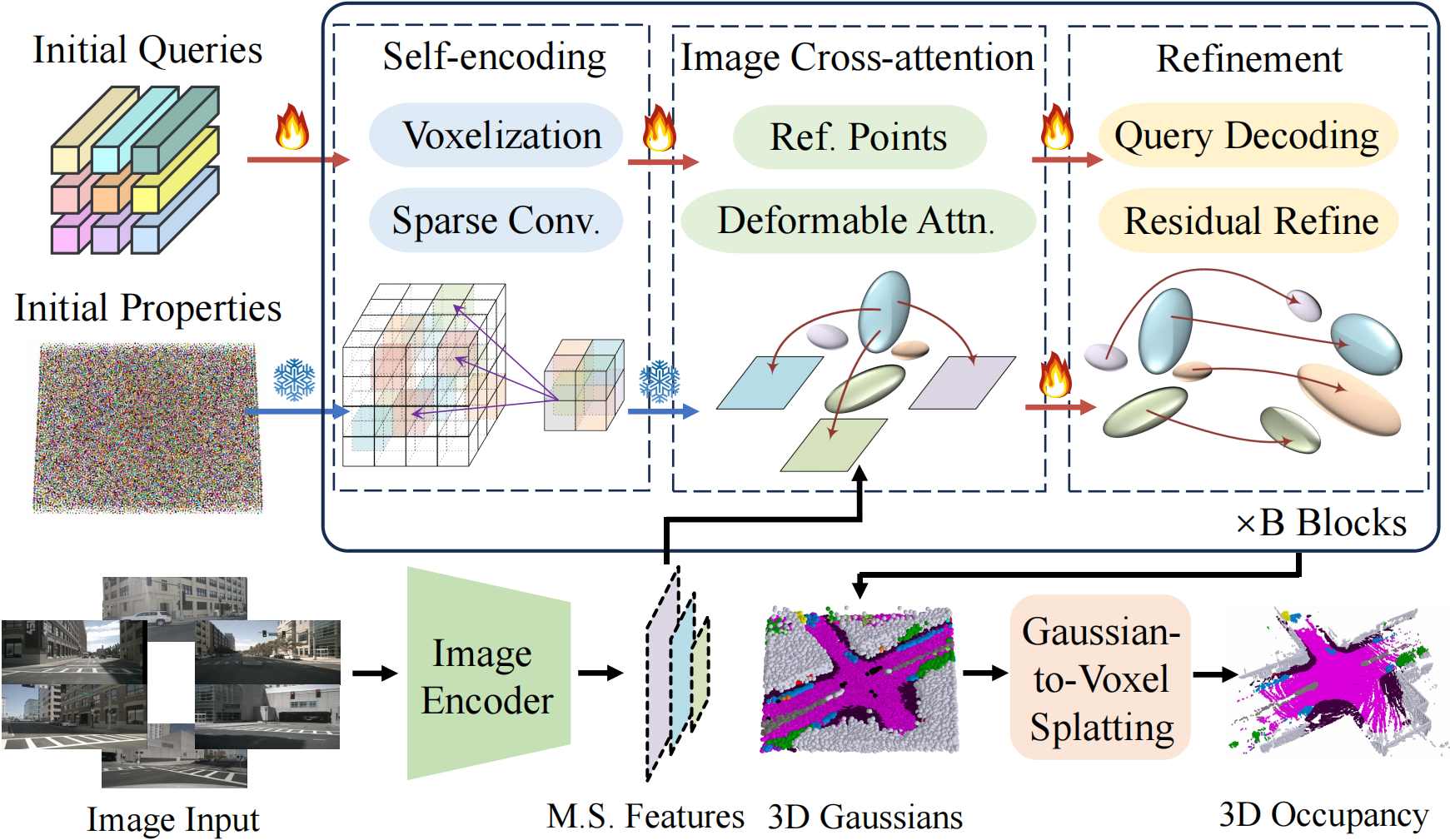

GaussianFormer-2: Probabilistic Gaussian Superposition for Efficient 3D Occupancy Prediction
Yuanhui Huang, Amonnut Thammatadatrakoon, Wenzhao Zheng, Yunpeng Zhang, Dalong Du, Jiwen Lu
arXiv, 2025
arxiv / code / website

GaussianFormer-2 interprets each Gaussian as a probability distribution of its neighborhood being occupied and conforms to probabilistic multiplication to derive the overall geometry.

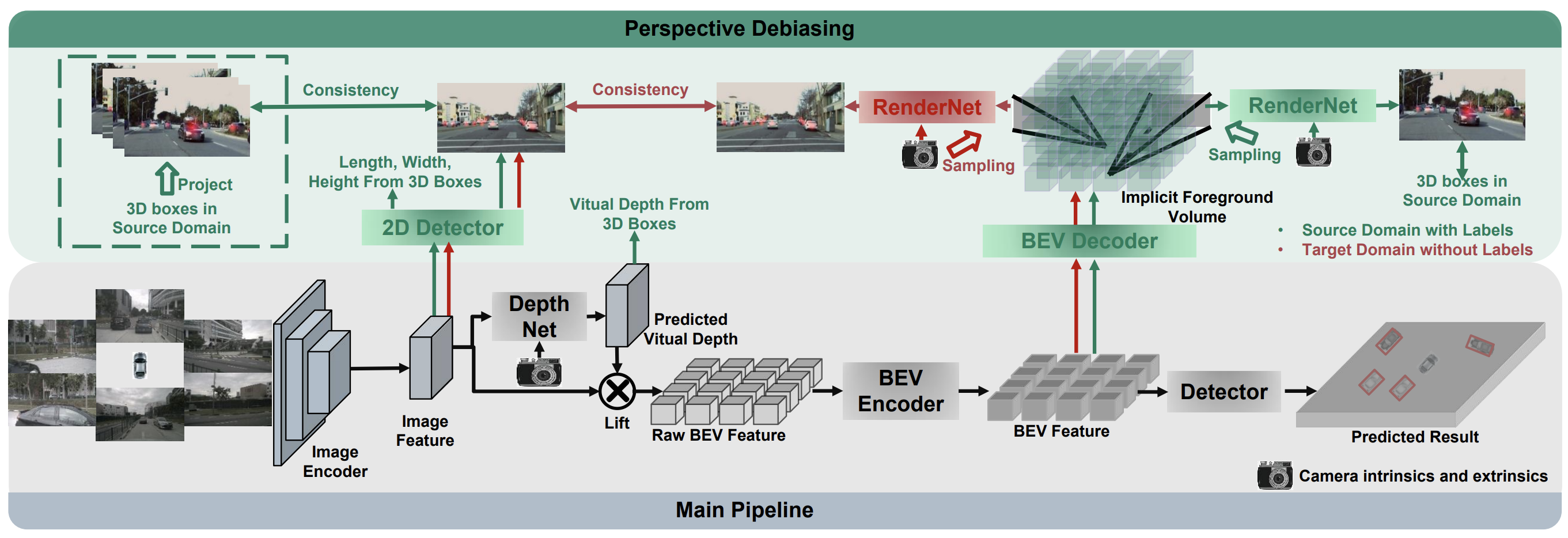

Towards Generalizable Multi-Camera 3D Object Detection via Perspective Debiasing
Hao Lu, Yunpeng Zhang, Qing Lian, Dalong Du, Yingcong Chen
AAAI Conference on Artificial Intelligence (AAAI), 2025
arxiv / code / website

We propose a novel framework that aligns 3D detection with 2D camera plane results by perspective rendering, thus achieving consistent and accurate results when facing serious domain shifts.

DrivingRecon: Large 4D Gaussian Reconstruction Model For Autonomous Driving
Hao Lu, Tianshuo Xu, Wenzhao Zheng, Yunpeng Zhang, Wei Zhan, Dalong Du, Masayoshi Tomizuka, Kurt Keutzer, Yingcong Chen
arXiv, 2024
arxiv / code / website

We introduce the Large 4D Gaussian Reconstruction Model (DrivingRecon), a generalizable driving scene reconstruction model that directly predicts 4D Gaussians from surround-view videos.

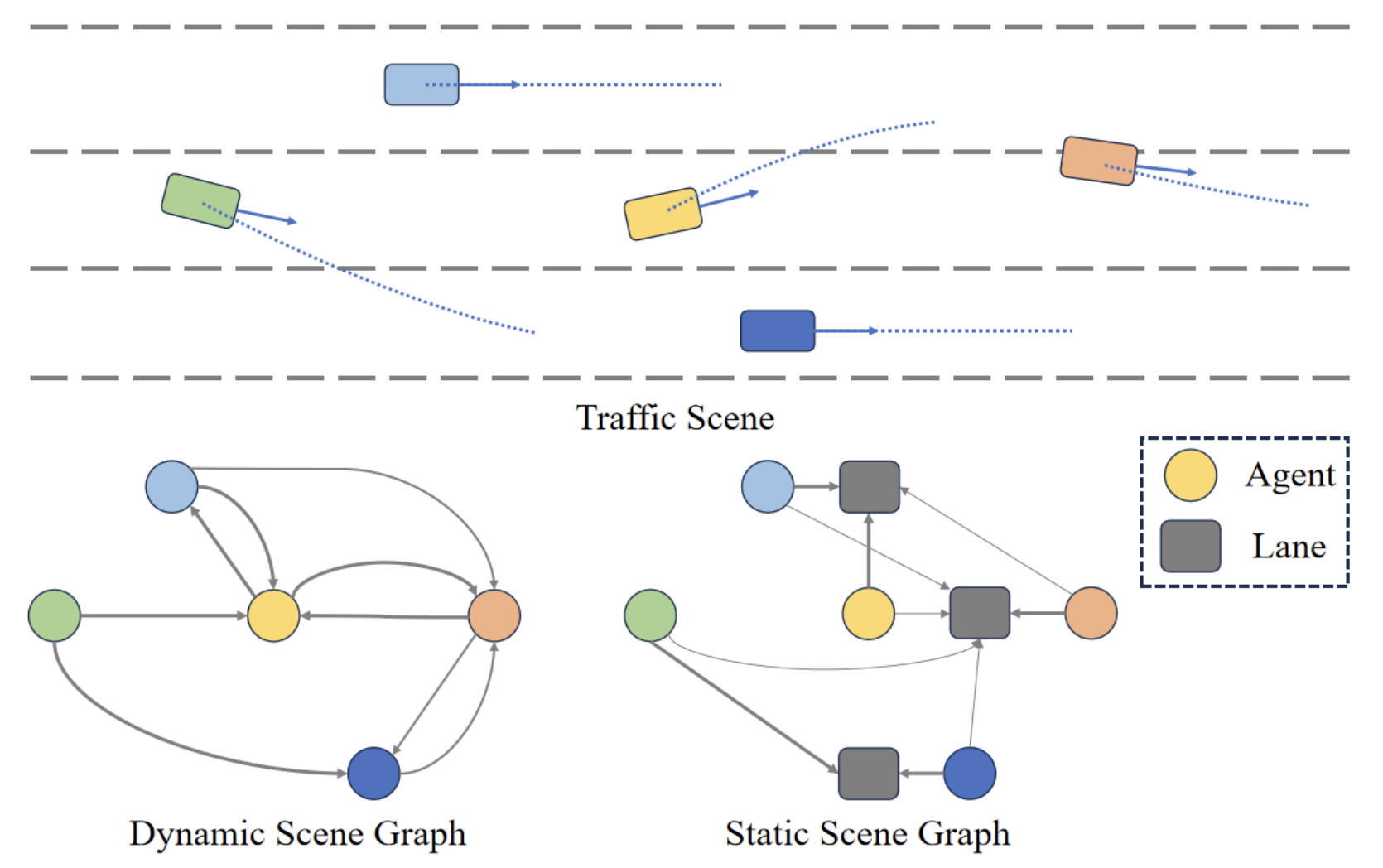

GraphAD: Interaction Scene Graph for End-to-End Autonomous Driving
Yunpeng Zhang, Deheng Qian, Ding Li, Yifeng Pan, Yong Chen, Zhenbao Liang, Zhiyao Zhang, Shurui Zhang, Hongxu Li, Maolei Fu, Yun Ye, Zhujin Liang, Yi Shan, Dalong Du
arXiv, 2024
arxiv

We propose the Interaction Scene Graph (ISG) as a unified method to model the interactions among the ego-vehicle, road agents, and map elements.

GaussianFormer: Scene as Gaussians for Vision-Based 3D Semantic Occupancy Prediction
Yuanhui Huang, Amonnut Thammatadatrakoon, Wenzhao Zheng, Yunpeng Zhang, Dalong Du, Jiwen Lu
European Conference on Computer Vision (ECCV), 2024
arxiv / code / website

GaussianFormer proposes 3D semantic Gaussians as a more efficient object-centric representation for driving scenes compared with 3D occupancy.

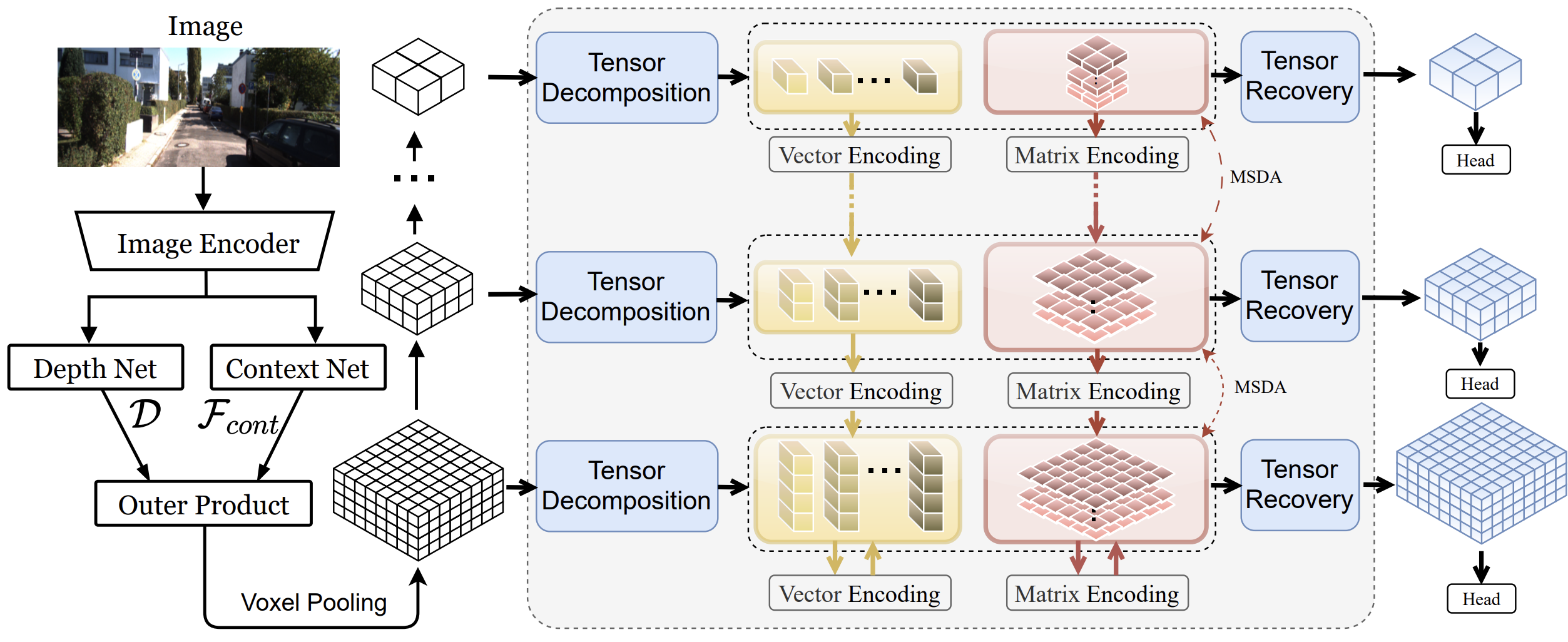

LowRankOcc: Tensor Decomposition and Low-Rank Recovery for Vision-based 3D Semantic Occupancy Prediction
Linqing Zhao, Xiuwei Xu, Ziwei Wang, Yunpeng Zhang, Borui Zhang, Wenzhao Zheng, Dalong Du, Jie Zhou, Jiwen Lu
IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024
arxiv

LowRankOcc leverages the intrinsic low-rank property of 3D occupancy data, factorizing voxel representations into low-rank components to efficiently mitigate spatial redundancy without sacrificing performance.

OpenOccupancy: A Large Scale Benchmark for Surrounding Semantic Occupancy Perception
Xiaofeng Wang, Zheng Zhu, Wenbo Xu, Yunpeng Zhang, Yi Wei, Xu Chi, Yun Ye, Dalong Du, Jiwen Lu, Xingang Wang
IEEE International Conference on Computer Vision (ICCV), 2023
arxiv / code / website

Towards a comprehensive benchmarking of surrounding perception algorithms, we propose OpenOccupancy, which is the first surrounding semantic occupancy perception benchmark.

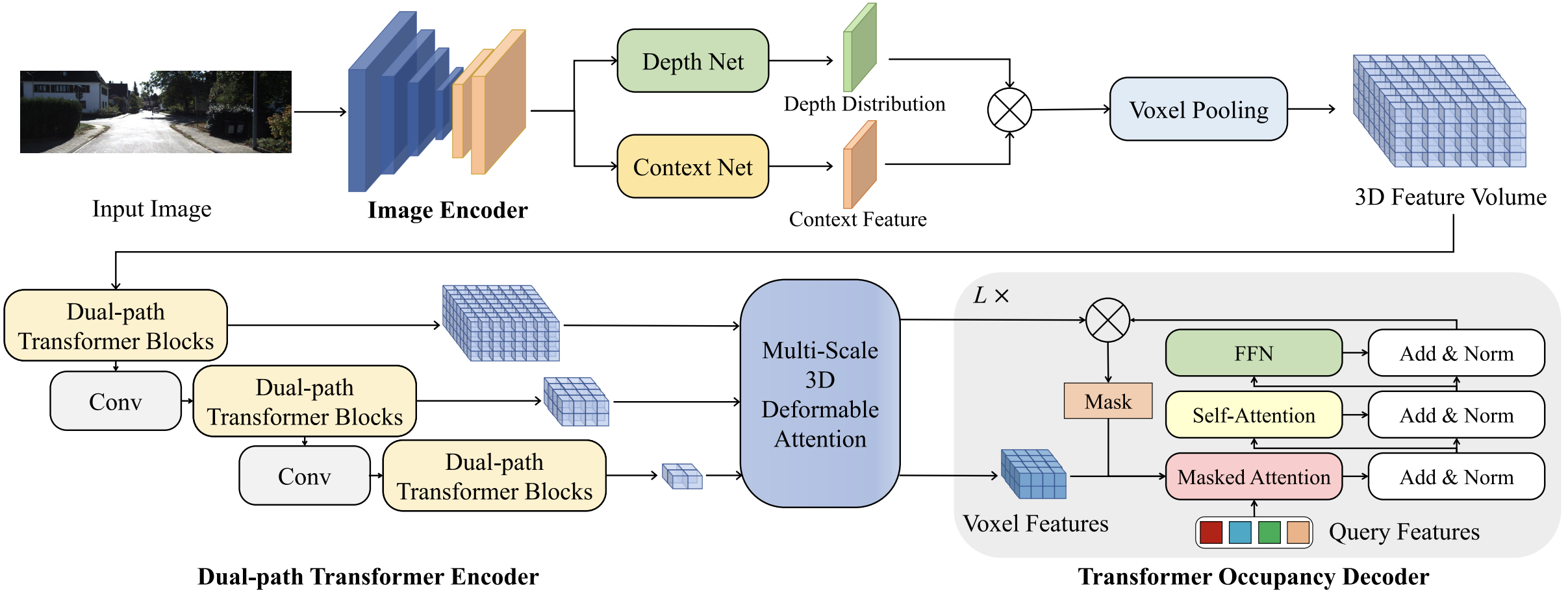

OccFormer: Dual-path Transformer for Vision-based 3D Semantic Occupancy Prediction
Yunpeng Zhang, Zheng Zhu, Dalong Du
IEEE International Conference on Computer Vision (ICCV), 2023
arxiv / code / website

OccFormer introduces a dual-path transformer network to effectively process the 3D volume for semantic occupancy prediction, achieving long-range, dynamic, and efficient encoding of the camera-generated 3D voxel features as well as state-of-the-art performance.

Tri-Perspective View for Vision-Based 3D Semantic Occupancy Prediction
Yuanhui Huang, Wenzhao Zheng, Yunpeng Zhang, Jie Zhou, Jiwen Lu
IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023
arxiv / code / website

Given only surround-camera RGB images as inputs, our model (trained using only sparse LiDAR point supervision) can predict the semantic occupancy for all volumes in the 3D space.

Are We Ready for Vision-Centric Driving Streaming Perception? The ASAP Benchmark
Xiaofeng Wang, Zheng Zhu, Yunpeng Zhang, Guan Huang, Yun Ye, Wenbo Xu, Ziwei Chen, Xingang Wang
IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023
arxiv / code / website

We propose the Autonomous-driving StreAming Perception (ASAP) benchmark, which is the first benchmark to evaluate the online performance of vision-centric perception in autonomous driving.

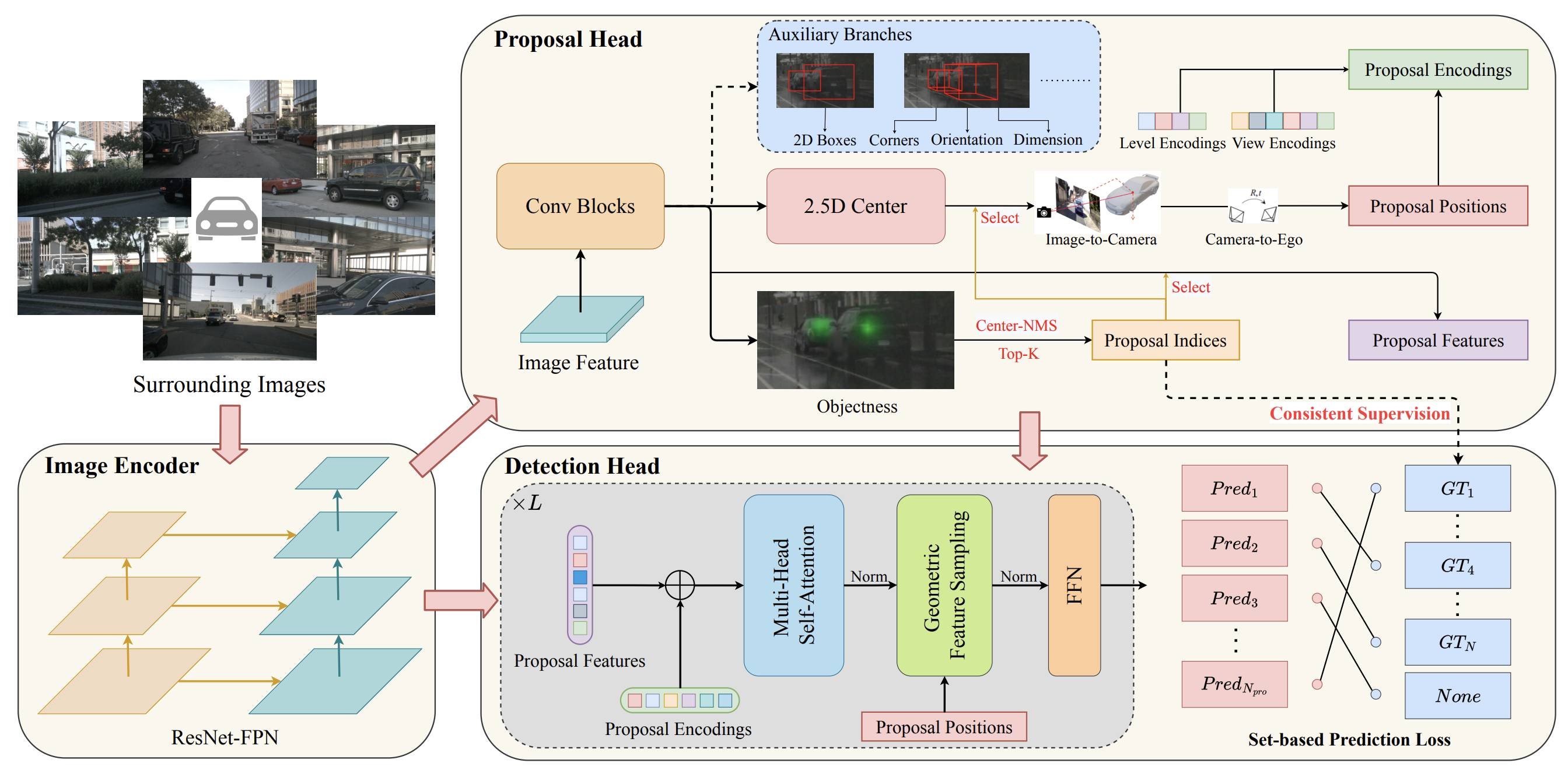

A Simple Baseline for Multi-Camera 3D Object Detection
Yunpeng Zhang, Wenzhao Zheng, Zheng Zhu, Guan Huang, Jiwen Lu, Jie Zhou
AAAI Conference on Artificial Intelligence (AAAI), 2023
arxiv / code / website

We propose a two-stage method for 3D object detection with surrounding cameras, which utilizes the proposals from monocular methods for end-to-end refinement with the transformer decoder.

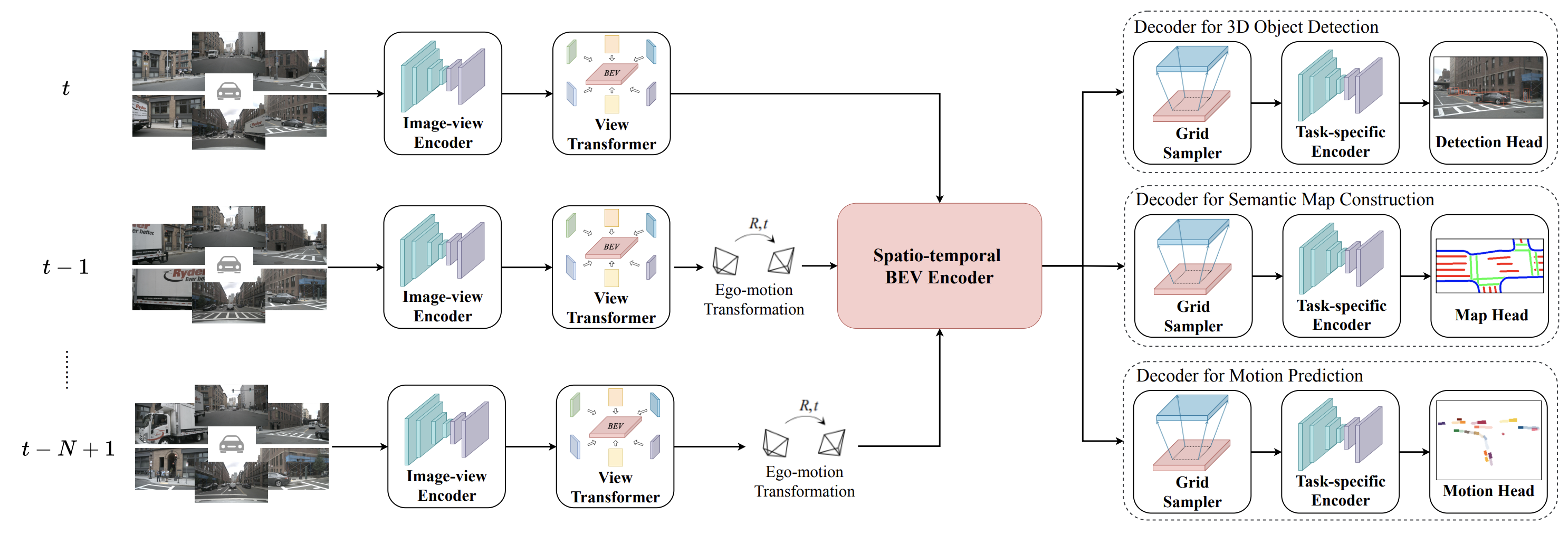

BEVerse: Unified Perception and Prediction in Birds-Eye-View for Vision-Centric Autonomous Driving
Yunpeng Zhang, Zheng Zhu, Wenzhao Zheng, Junjie Huang, Guan Huang, Jie Zhou, Jiwen Lu
arXiv, 2022
arxiv / code / website

We present BEVerse, the first unified framework for 3D perception and prediction based on multi-camera systems.

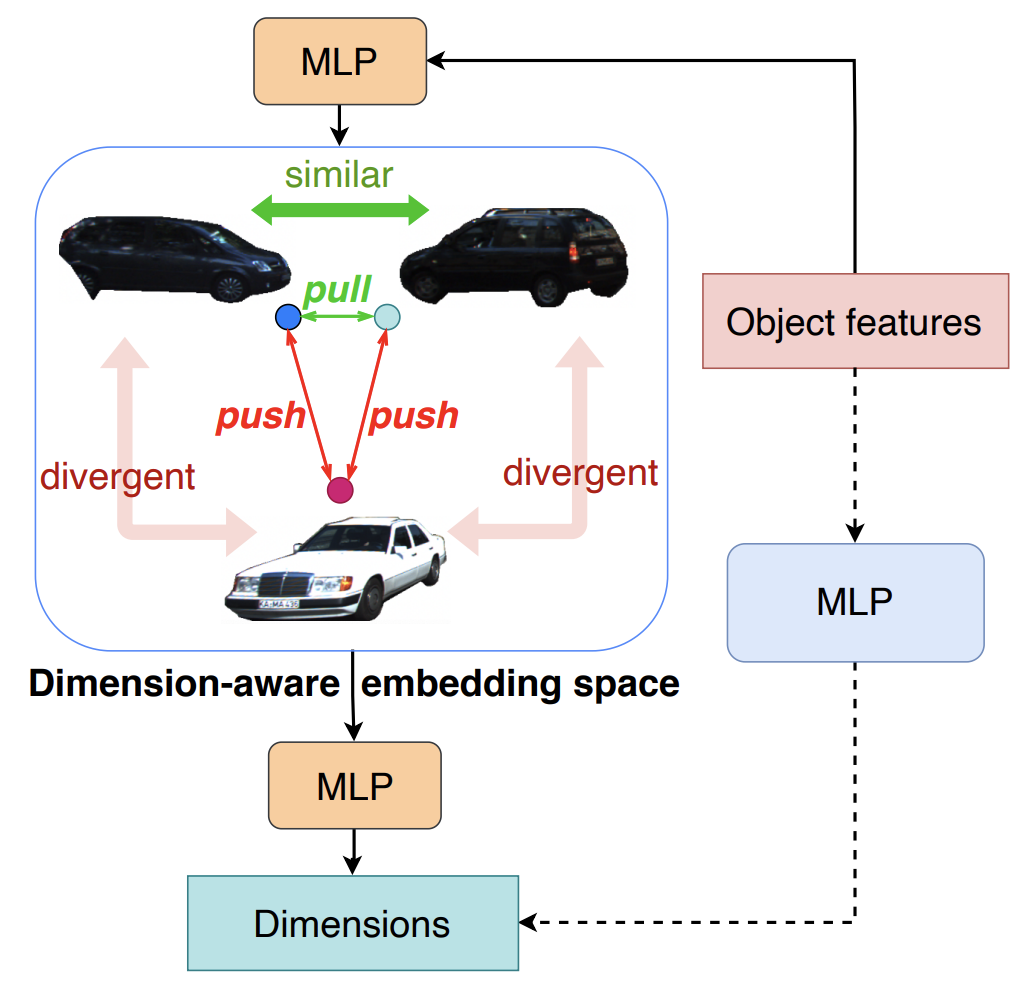

Dimension Embeddings for Monocular 3D Object Detection
Yunpeng Zhang, Wenzhao Zheng, Zheng Zhu, Guan Huang, Dalong Du, Jie Zhou, Jiwen Lu
IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022
arxiv

We introduce a general method to learn appropriate embeddings for dimension estimation in monocular 3D object detection, using dimension-distance-guided contrastive learning in the embedding space and learning representative shape templates.

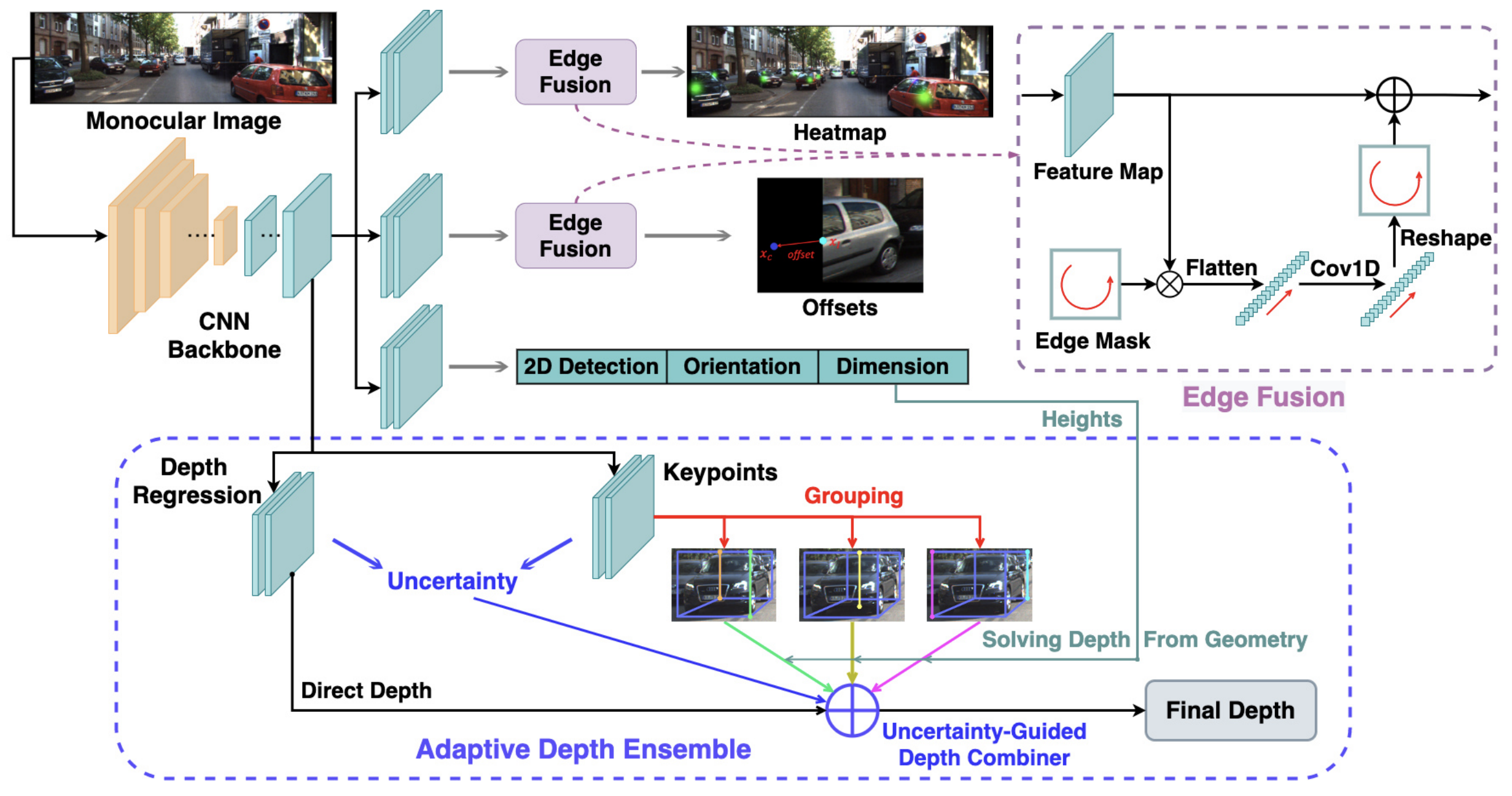

Objects are Different: Flexible Monocular 3D Object Detection
Yunpeng Zhang, Jiwen Lu, Jie Zhou
IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021
arxiv / code / website

MonoFlex introduces a decoupling mechanism for handling truncated obstacles and an uncertainty-guided ensembling strategy for enhanced precision in depth estimation.

Industrial Projects

PhiGent Robotics is a startup in the field of autonomous driving whose vision is easy mobility for everyone. We consistently develop state-of-the-art algorithms and products for data closed-loop systems, multi-modal perception, motion prediction, and end-to-end driving.

Design and source code from Jon Barron's website